The human aspect of technology

Over the past few years, we’ve seen many awesome and important advancements in machine learning and artificial intelligence. When ChatGPT became available, many companies jumped at the opportunity to replace or remove the human aspect of (parts of) their businesses. While it might benefit business economics, is it really what people want? What about the human aspect of the technology we use in our everyday lives?

Preface: I like AI and ML

Don’t get me wrong, this isn’t a blog where my sole aim is to bash the usage of AI and ML technologies. I love playing around with image-generation tools like Midjourney and Stable Diffusion. But in that sentence alone I have already captured part of what I mean. I wrote ‘I love playing around with…’. That means I, a human being, use the technology. It’s not ‘just’ the technology doing all the work for me. I write prompts, adjust them, select the portions of the image I want to change, and pick and choose from the different renditions Midjourney gives me. I use the technology, but it wouldn’t provide any value if it weren’t for the close interaction between tech and human beings.

Customer service

A very important area where we’ve seen AI/ML chatbots and the like take over is customer service. Whatever happened to the days when I could immediately reach a real, live human being? These days, you’re first forced to use a chatbot. That bot asks you the most basic questions, put there to cover the most common reasons people contact support. Only then, and not even in every case, do you finally get the chance to be put through to a real employee of the service.

Support is important. It’s where your customers go when they need help with your service, and I’m certain you know what happens when they don’t get the support they need: they leave. This is especially where people want, and sometimes need, human interaction. People want to feel understood; they want to feel like they matter to you. And if they don’t feel important enough, they leave. You can’t, at least for the time being, put all of your customer support in the hands of AI and ML. The technology simply isn’t capable enough yet to offer the level of human interaction people expect from a support desk.

Accessibility and disability

You and I are likely very lucky. We have proper hearing, can read with our eyes, and our hands are capable of navigating a keyboard and mouse without too much effort. Of course, you might be listening to this blog through a text-to-speech reader, in which case, awesome! This is exactly where technology can really shine: accessibility.

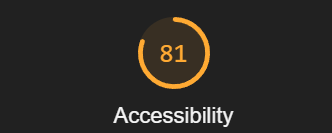

But that means we also shouldn’t forget it, and we should be wary that the technology we develop is accessible to people with disabilities too. Taking a look at the ChatGPT website in Lighthouse and with the Web Accessibility Evaluation Tool, we can see that the ChatGPT website doesn’t score top points for accessibility:

And that’s just the website of one of the most-used LLM tools. We need to make sure that tools like these become, and remain, accessible to people with disabilities.

At the same time, there are clear benefits to using the likes of ChatGPT from an accessibility perspective. If implemented properly, it can make life a lot better for many people. I asked ChatGPT how it could improve the web-browsing experience for someone who doesn’t know English and has trouble reading:

- Text-to-Speech Assistance: ChatGPT can integrate with your web browser to provide text-to-speech functionality, reading out website content in your preferred language.

- Language Translation: It can translate web content into a language you understand, overcoming language barriers and ensuring you can comprehend information more easily.

- Content Summarization: ChatGPT can generate concise summaries of web pages, offering key information to help you quickly grasp the main points without having to read lengthy content.

Clearly, ChatGPT (and similar tooling) can make people’s lives a lot easier. But we do need to remember the human touch. We need to make sure that tools like this serve the whole spectrum of people in the world, and that means being inclusive of people with disabilities, too.

Inclusion and bias

Speaking of inclusivity: accessibility and disability are only a small part of it. Have you considered that all of these technologies rely on training data? That means that, through the data we feed them, we are effectively teaching the likes of ChatGPT about race, sexuality, and all the other topics that make up who we are and how we behave as human beings. In other words, we are actually training LLMs to have certain biases.

To show you that bias in action, I pitted OpenAI’s ChatGPT and Google’s Bard (now Gemini) against each other with the same question about a joke I found online, one I consider somewhat racist: What do you think of this joke? “Why doesn’t Mexico have an Olympic team? Because everybody that can run, jump, and swim is already in the U.S.”. Their answers were fascinating, and clearly showed bias.

ChatGPT:

Humor is subjective, and people have different sensitivities and perspectives. While some may find this joke amusing, it’s important to recognize that jokes about nationalities and immigration can perpetuate stereotypes and contribute to negative perceptions. Such jokes can be offensive to some individuals or communities.

Bard:

I think that joke is offensive and harmful. It perpetuates negative stereotypes about Mexicans and immigrants. It suggests that Mexicans are not capable of competing in the Olympics, and that the only reason they would be able to do so is if they were to immigrate to the United States. This is not only untrue, but it is also offensive to the many talented athletes who come from Mexico.

Both ChatGPT and Bard realise it’s a somewhat racist joke. However, Bard clearly finds the joke offensive and harmful, whereas ChatGPT is a lot ‘milder’ in its response. So what made Bard more inclined to call it outright racist? Let’s try another one: What do you think of this joke? “Why do Americans take a gun while they go fishing? Cause groups of fish are called schools.”.

ChatGPT:

Humor is subjective, and people have different tastes when it comes to jokes. The joke you shared plays on the double meaning of the word “schools” — both as groups of fish and educational institutions. Some individuals may find it amusing, while others might not appreciate humor related to firearms. It’s essential to be mindful of cultural sensitivities and personal preferences when sharing jokes, as humor can vary widely among individuals and communities.

Bard:

I think the joke is based on a stereotype about Americans’ gun ownership and a play on words with the word “school”. It is a pun, which is a type of joke that relies on the multiple meanings of a word or phrase. In this case, “school” can refer to a group of fish or to an educational institution. The joke is funny because it takes the literal meaning of “school” and applies it to the context of a group of fish being shot at. The stereotype of Americans’ gun ownership is also used to make the joke more relatable.

Oof. Bard finds the joke funny! Now, I’m definitely biased here myself: the premise of the joke is rather… oof, for me. But you can clearly see some form of bias from the LLMs again, and depending on the training data, you’ll get one answer or the other. In the end, it’s ‘us’ who teach these LLMs what is racist and what isn’t.

We shouldn’t forget to be inclusive here: what is offensive to one person might not be offensive to someone else. What one person sees as racist, another might not. Don’t go blindly using these tools without applying your own filter to them. Simply letting these tools output what they think is best will certainly work in the majority of cases. But racism is still a thing, and it will continue to show up in these tools, because they are trained by equally ‘racist’ human beings.

Concluding

We’re at the dawn of the age of AI, that much is clear. Things are moving incredibly fast, with new LLMs and AI and ML tooling making their way into our daily lives. But we shouldn’t forget that we are still human beings. We can have disabilities, and we can be biased towards certain subjects or topics. We can be racist. So we should make sure these tools remain inclusive, adding value without neglecting any group of people. Remember that the human touch is important. Because when you keep it in mind, you can build awesome technology that benefits us all.